Camera

Cameras are the most important nodes, because you need at least one Camera in the graph to render anything. Every frame, each Camera gathers all of the renderable geometry and lights in the graph and renders them.

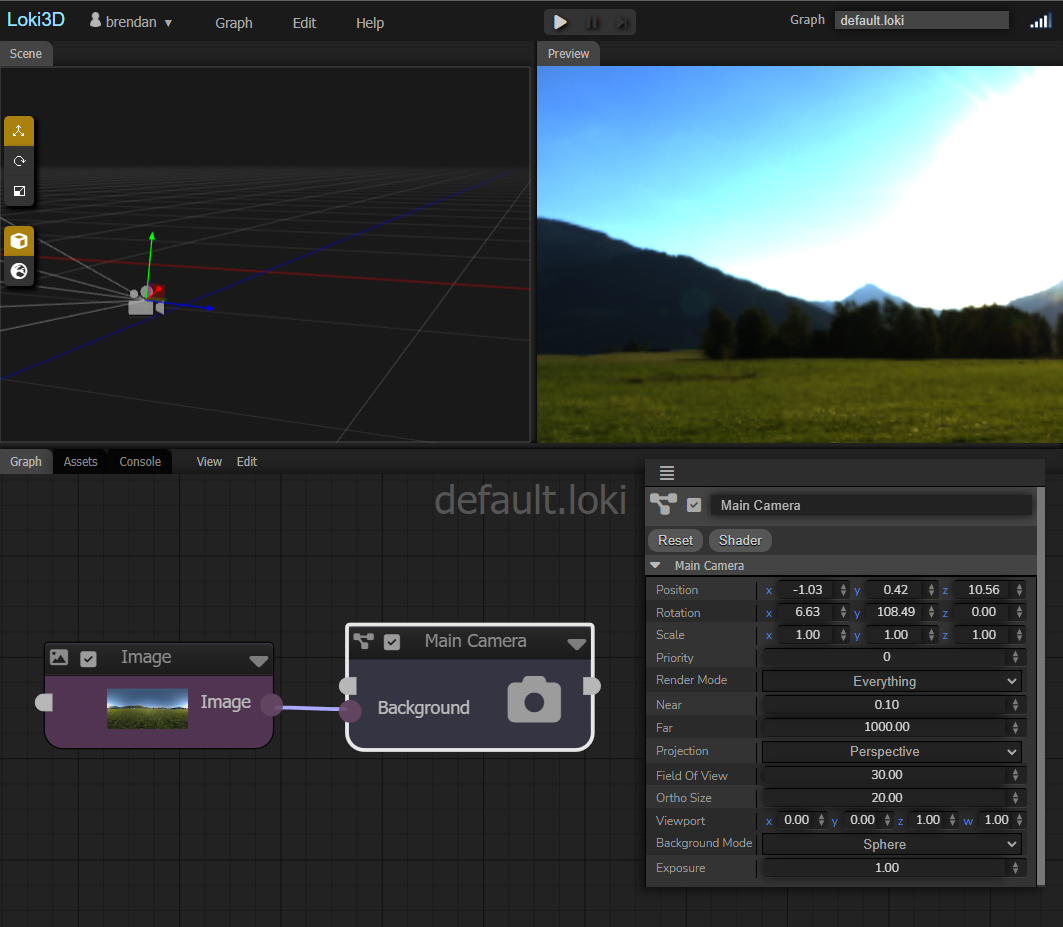

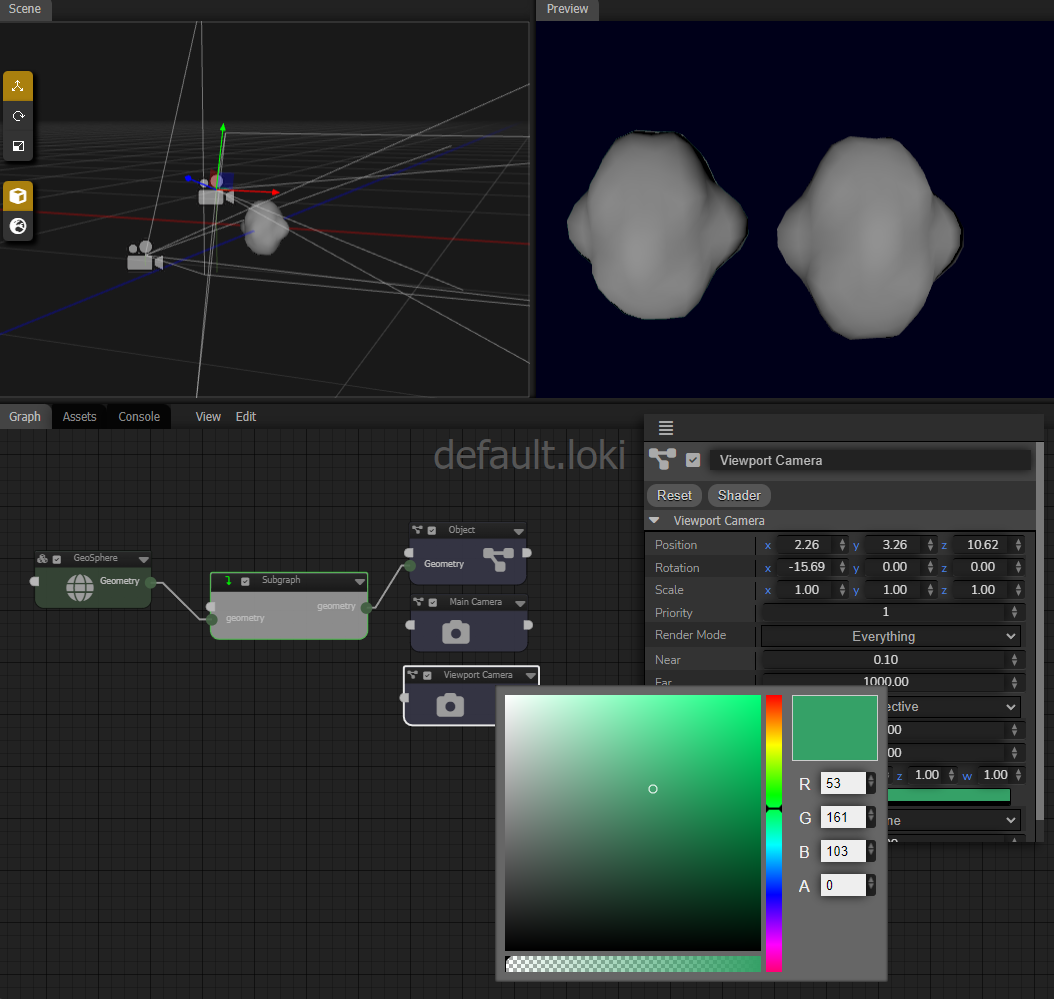

Camera Background

Each Camera has a Background property that defines what it renders behind objects. By default, the camera's background is a solid color. You can connect Image or Material nodes to the Background socket for more interesting backgrounds.

Besides the Background property, there is also a Background Mode property that determines how the background is rendered. By default, the background is rendered as a flat background plate (Plane). You can also render the background as a Sphere or a Cube if you're using a CubeMap or a Material as the Background.
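The pairing rules between Background and Background Mode can be summarized as a small lookup table. This is a minimal Python sketch, not the tool's API: `BackgroundKind`, `BackgroundMode`, `ALLOWED_MODES`, and `check_background` are hypothetical names. It assumes that solid colors and plain Images only use the Plane mode and that a CubeMap is limited to Sphere or Cube, because those are the only pairings described here; the rule that a Material needs Sphere or Cube comes from the Material background notes.

```python
from enum import Enum


class BackgroundKind(Enum):
    COLOR = "color"        # default solid color
    IMAGE = "image"        # Image node connected to Background
    CUBEMAP = "cubemap"    # CubeMap connected to Background
    MATERIAL = "material"  # Material node, e.g. Sky Material


class BackgroundMode(Enum):
    PLANE = "plane"        # default flat background plate
    SPHERE = "sphere"
    CUBE = "cube"


# Hypothetical rule table. Assumption: COLOR and IMAGE only use the Plane,
# and CUBEMAP is limited to Sphere/Cube, since those are the only pairings
# the text describes. MATERIAL requiring Sphere/Cube is stated in the text.
ALLOWED_MODES = {
    BackgroundKind.COLOR: {BackgroundMode.PLANE},
    BackgroundKind.IMAGE: {BackgroundMode.PLANE},
    BackgroundKind.CUBEMAP: {BackgroundMode.SPHERE, BackgroundMode.CUBE},
    BackgroundKind.MATERIAL: {BackgroundMode.SPHERE, BackgroundMode.CUBE},
}


def check_background(kind: BackgroundKind,
                     mode: BackgroundMode = BackgroundMode.PLANE) -> bool:
    """Return True if the Background/Background Mode pairing fits the table."""
    return mode in ALLOWED_MODES[kind]


print(check_background(BackgroundKind.MATERIAL))                         # False: Plane is the default
print(check_background(BackgroundKind.MATERIAL, BackgroundMode.SPHERE))  # True
```

The default argument mirrors the default Plane mode, which is why a Material background fails the check until the mode is changed.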

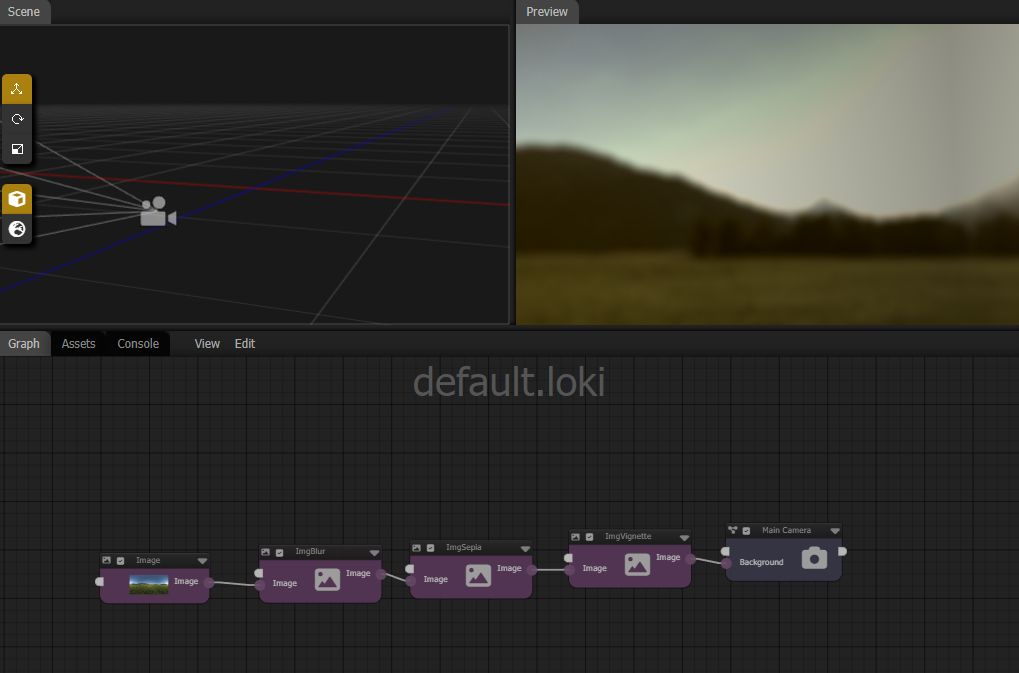

Image Background

You can also connect Image nodes to the Background of a Camera. If you're just doing an image processing project, you don't even need any geometry in the scene; connecting image nodes to the Camera Background is all you need.

You're not limited to connecting a single Image node directly to the Background; you can connect the end node of a full image processing graph.

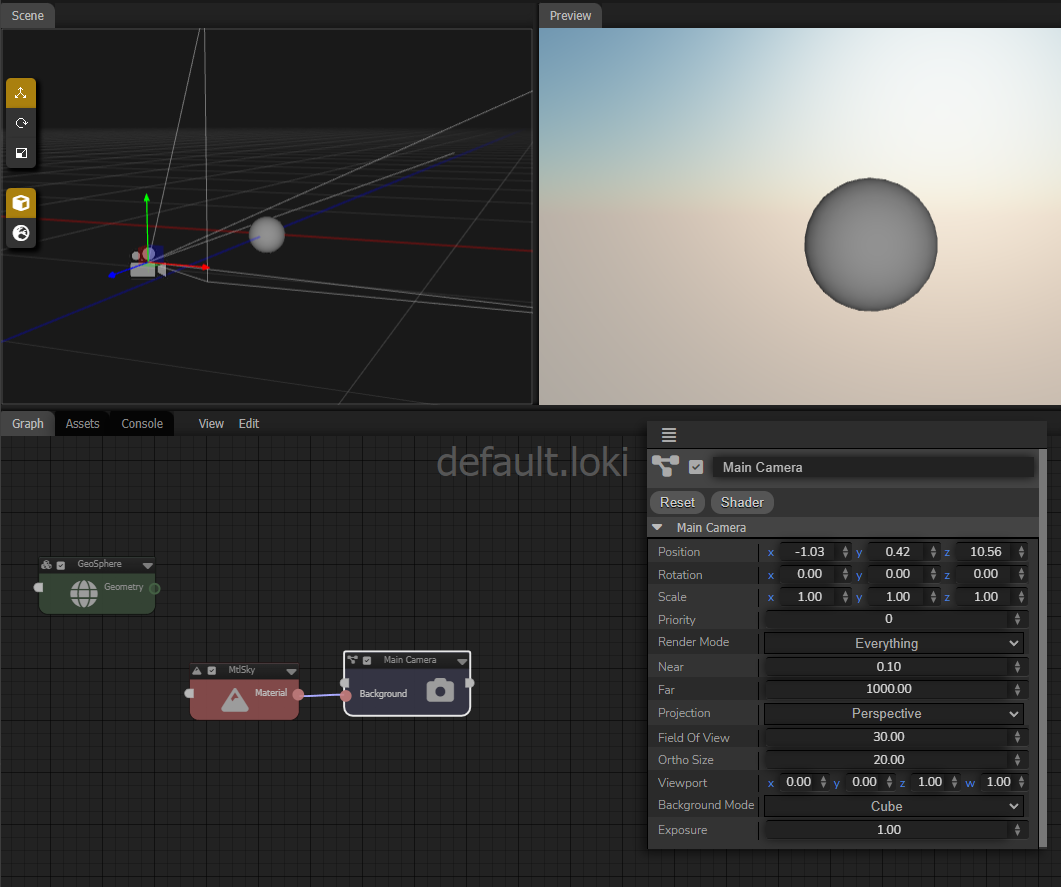

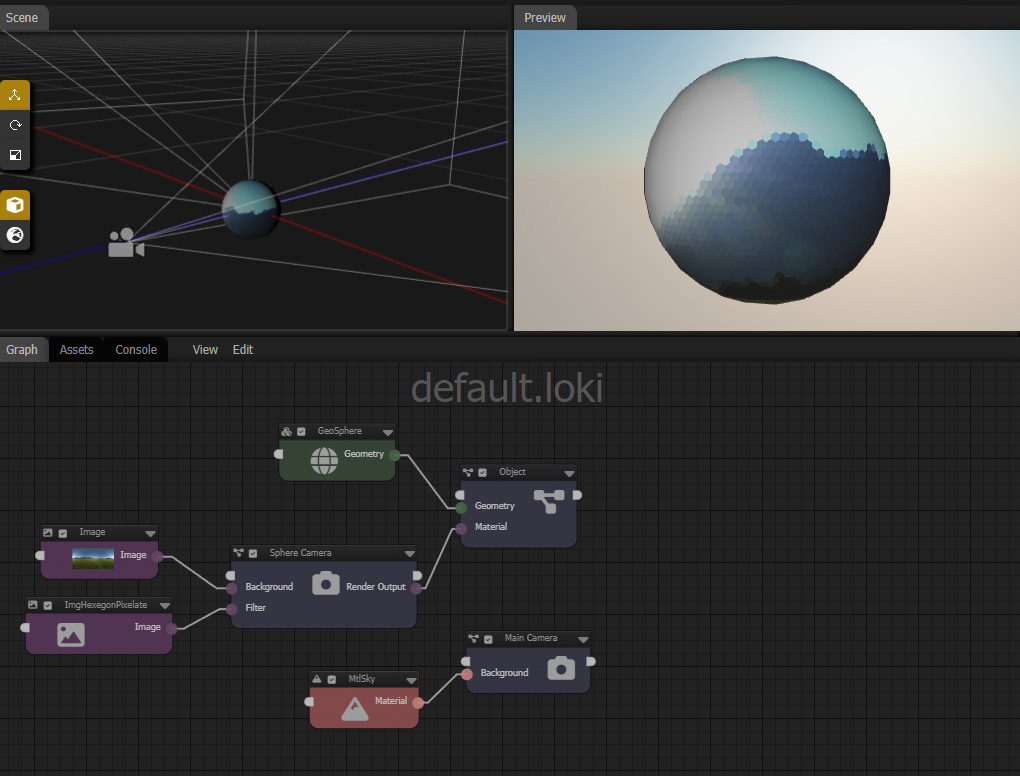

Sky Background

You can connect any Material node to the Background socket; a particularly interesting one is the Sky Material node. With a Material Background, you need to use the Cube or Sphere Background Mode.

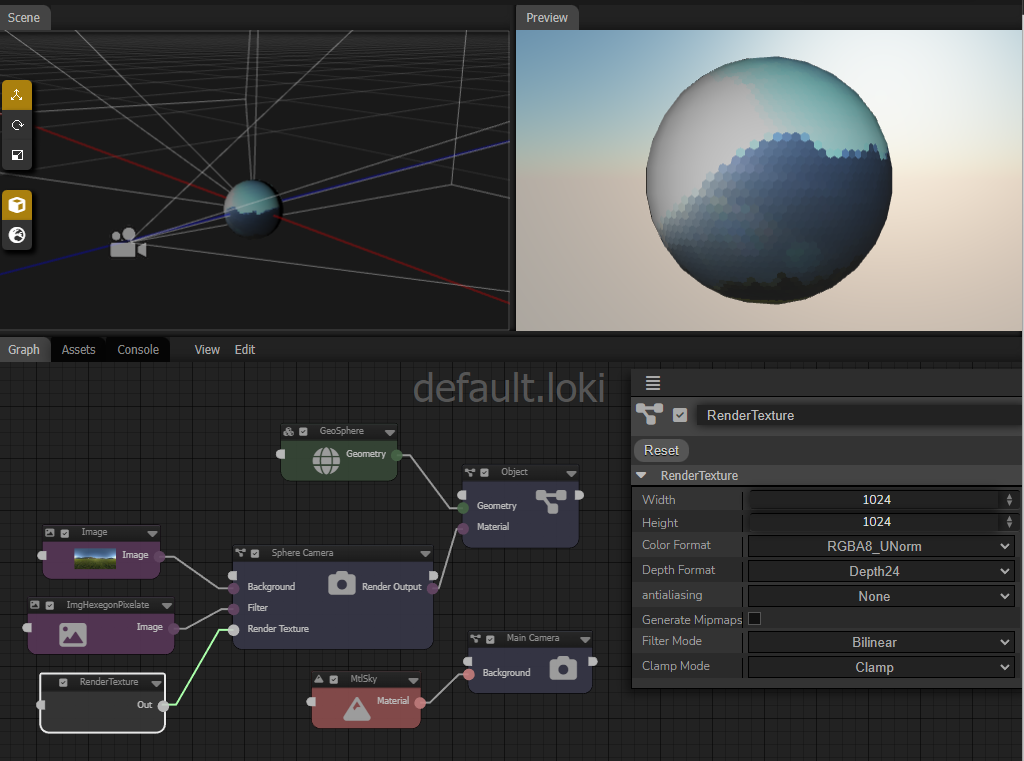

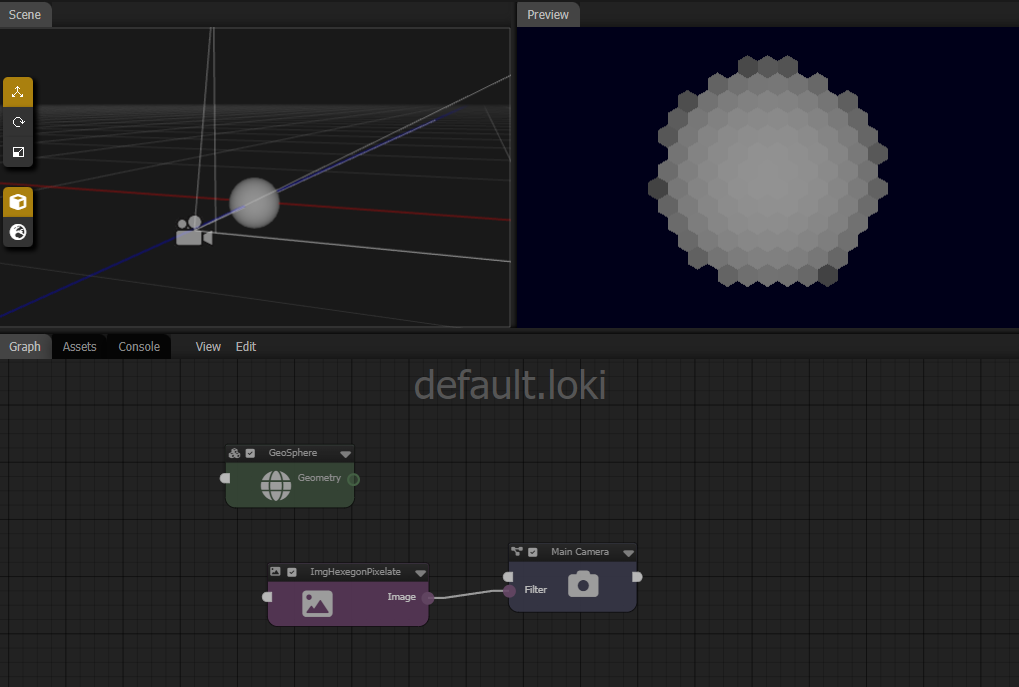

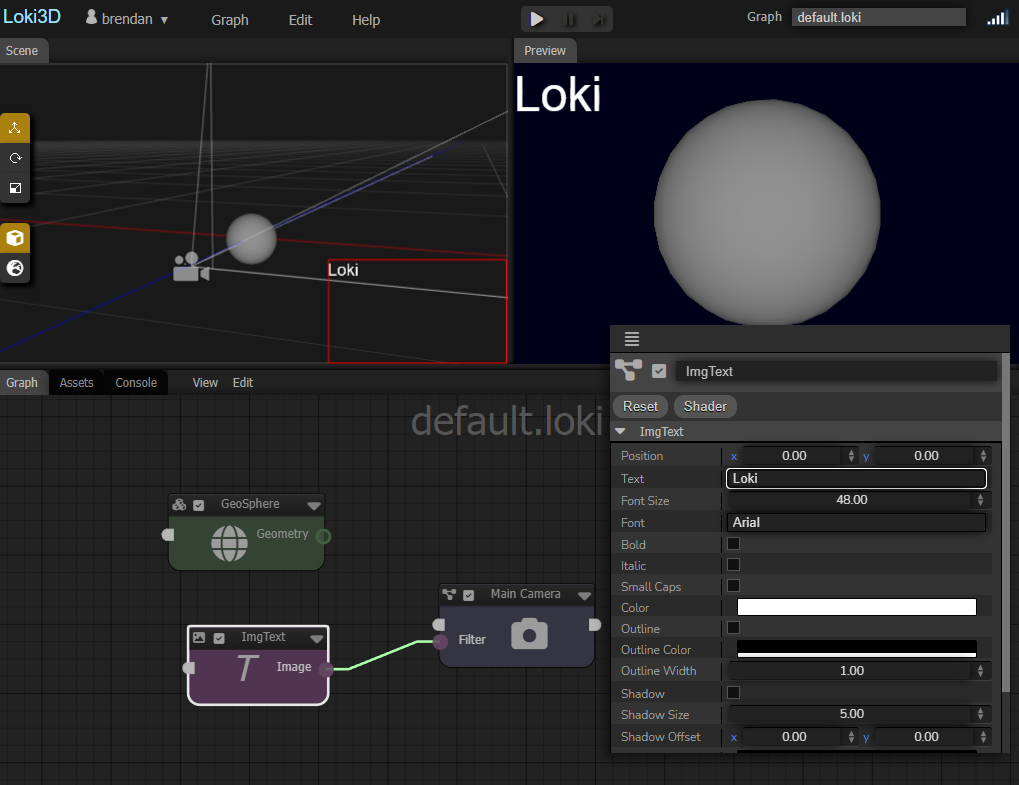

Camera Filter

Cameras can do Image Processing or Compositing on the render result with the camera's Filter property. Image nodes can be connected to the Camera's Filter socket. If an Image node is a filter node that takes an input, and nothing is connected to that input, it implicitly receives the camera's render result as its input.

Image nodes connected to Filter are alpha composited on top of the camera render, so you can layer images on top of the render. If an opaque image is used as the Filter, it will draw over the scene.
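The Filter behavior described above (an unconnected input implicitly receives the render, and the filter output is then alpha composited over the render) can be modeled in a few lines. This is a minimal sketch, assuming premultiplied-alpha RGBA pixels stored as nested Python lists; `FilterNode`, `over`, and `apply_filter` are hypothetical names, and the tool's real compositing may differ in details such as color space. The blend used is the standard Porter-Duff "over" operator.

```python
from dataclasses import dataclass
from typing import Callable, Optional

# Hypothetical model: a pixel is premultiplied-alpha RGBA in [0, 1],
# and a raster is a list of rows of pixels.
Pixel = tuple[float, float, float, float]
Raster = list[list[Pixel]]


def over(src: Pixel, dst: Pixel) -> Pixel:
    """Porter-Duff 'source over destination' for premultiplied RGBA."""
    k = 1.0 - src[3]
    return tuple(s + d * k for s, d in zip(src, dst))


@dataclass
class FilterNode:
    """Stand-in for an Image node connected to the camera's Filter socket."""
    op: Callable[[Raster], Raster]   # the node's image operation
    input: Optional[Raster] = None   # explicitly connected input, if any

    def evaluate(self, render: Raster) -> Raster:
        # An unconnected input implicitly receives the camera's render.
        source = self.input if self.input is not None else render
        return self.op(source)


def apply_filter(render: Raster, node: FilterNode) -> Raster:
    """Evaluate the filter, then composite its output over the render."""
    filtered = node.evaluate(render)
    return [[over(f, r) for f, r in zip(f_row, r_row)]
            for f_row, r_row in zip(filtered, render)]


blue = [[(0.0, 0.0, 1.0, 1.0)]]  # 1x1 opaque blue render

# A 50% red overlay that ignores its input: blended with the render.
tint = FilterNode(op=lambda _: [[(0.5, 0.0, 0.0, 0.5)]])
print(apply_filter(blue, tint))    # [[(0.5, 0.0, 0.5, 1.0)]]

# An opaque darken filter fed implicitly by the render: covers it fully.
darken = FilterNode(op=lambda img: [[(r * 0.5, g * 0.5, b * 0.5, a)
                                     for r, g, b, a in row] for row in img])
print(apply_filter(blue, darken))  # [[(0.0, 0.0, 0.5, 1.0)]]
```

The second case shows why an opaque Filter draws over the scene: with source alpha 1, the "over" blend keeps none of the original render.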

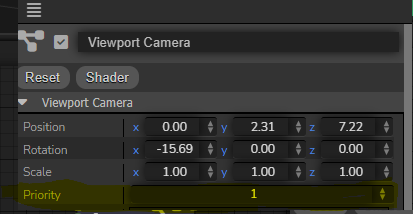

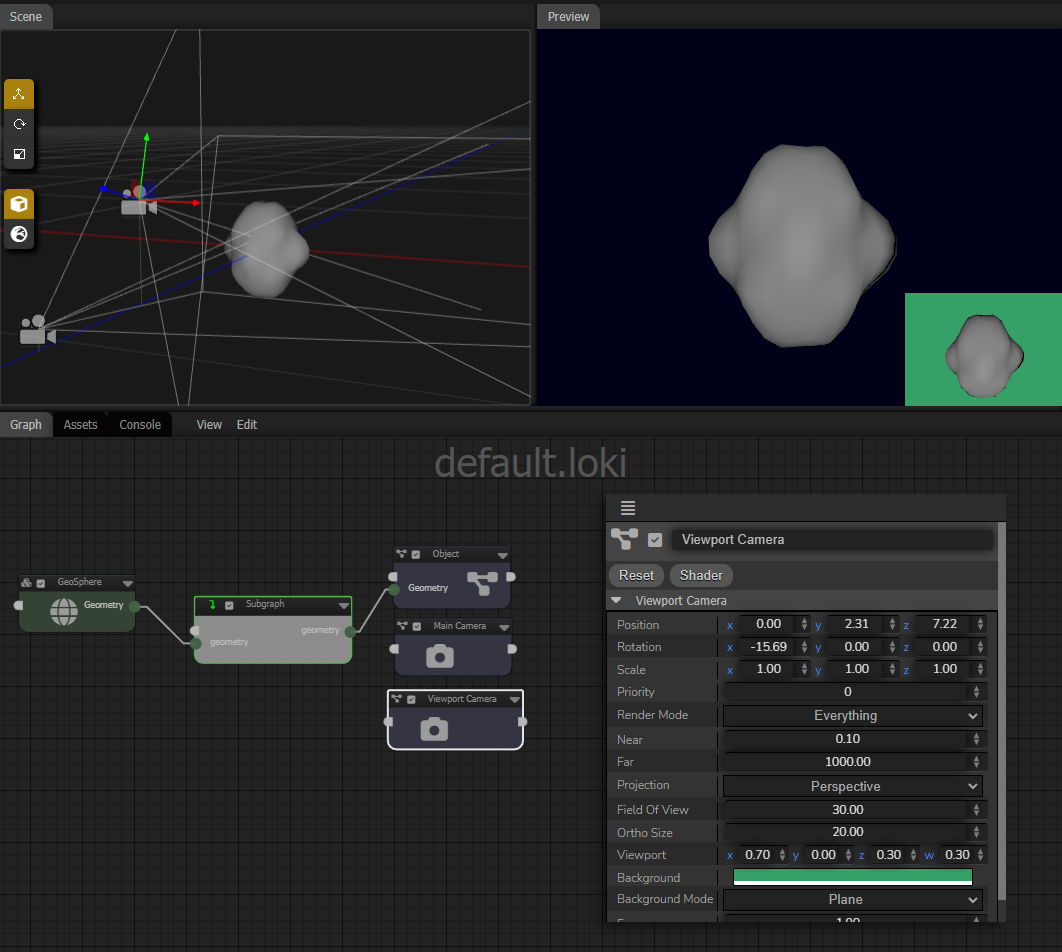

Multiple Cameras

You can have more than one camera in the graph. Each camera will render to the display, and by default a second camera will render on top of the first camera.

Camera Priority: By default, all cameras have the same rendering priority and are rendered in the order they were added to the Graph. You can control the order in which cameras are rendered with their Priority property. Cameras with higher Priority values render after cameras with lower Priority values.
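Priority ordering as described behaves like a stable sort: lower Priority first, and cameras with equal Priority keep the order in which they were added. A minimal sketch, where `Camera` and `render_order` are hypothetical names and a default Priority of 0 is an assumption (the text only says all cameras start with the same priority):

```python
from dataclasses import dataclass


@dataclass
class Camera:
    name: str
    priority: int = 0  # hypothetical field mirroring the Priority property


def render_order(cameras_in_added_order: list[Camera]) -> list[Camera]:
    # sorted() is stable, so cameras with equal Priority keep the order in
    # which they were added to the graph; lower Priority renders first.
    return sorted(cameras_in_added_order, key=lambda cam: cam.priority)


cams = [Camera("main"), Camera("hud", priority=1), Camera("minimap")]
print([c.name for c in render_order(cams)])  # ['main', 'minimap', 'hud']
```

Here "hud" renders last, so it is drawn on top of the other two cameras.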

There are several things you can do with multiple cameras:

- Viewports: Each camera has a Viewport property which defines the area of the display it will render into, in normalized device coordinates. You can have a second camera with a viewport covering a smaller area of the display, to render as an overlay camera.
- Transparent Background: You can set the background color of a Camera to be transparent or semi-transparent, and it will be composited on top of previously rendered cameras.
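The text doesn't spell out the exact Viewport layout, so this sketch assumes an (x, y, width, height) rectangle with each component in the 0 to 1 range and the origin at the bottom-left of the display. Some graphics APIs define normalized device coordinates as -1 to 1 instead, so check the tool's actual convention. `viewport_to_pixels` is a hypothetical helper that maps such a rectangle to pixels.

```python
def viewport_to_pixels(viewport: tuple[float, float, float, float],
                       display_w: int, display_h: int) -> tuple[int, int, int, int]:
    """Map a normalized (x, y, width, height) viewport to a pixel rectangle.

    Assumes 0..1 coordinates with (0, 0) at the bottom-left of the display.
    Returns (left, bottom, width, height) in pixels.
    """
    x, y, w, h = viewport
    # Round the edges rather than the size, so adjacent viewports share
    # edges without gaps or overlaps.
    left = round(x * display_w)
    bottom = round(y * display_h)
    right = round((x + w) * display_w)
    top = round((y + h) * display_h)
    return left, bottom, right - left, top - bottom


main = viewport_to_pixels((0.0, 0.0, 1.0, 1.0), 1920, 1080)
overlay = viewport_to_pixels((0.75, 0.0, 0.25, 0.25), 1920, 1080)
print(main)     # (0, 0, 1920, 1080)
print(overlay)  # (1440, 0, 480, 270)
```

The second camera covers the bottom-right quarter-size corner of a 1920x1080 display, which is the overlay-camera setup described above.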

Camera Render Texture

A Camera has a Render Output socket, which is an Image output. That means the camera's render can be connected anywhere that takes an Image input, such as a material texture. If a camera's Render Output is connected, the camera will not render to the display.

Custom Render Texture

By default, a camera with a Render Output connection renders to a texture with the same resolution and format as the display. You can override this by connecting a Render Texture node to the camera's Render Texture input socket. A Render Texture node lets you specify the resolution and format of the texture the camera renders into, which is then output through the Render Output socket.
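The target-selection rules from the Render Texture paragraphs can be sketched as one small function. This is a minimal model, not the tool's API: `TextureSpec`, `CameraOutputs`, and `resolve_target` are hypothetical names, and format strings such as "rgba8" are placeholders. The text doesn't say what happens when a Render Texture node is connected but Render Output is not; this sketch assumes the camera still renders to the display in that case.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class TextureSpec:
    width: int
    height: int
    format: str  # placeholder format name, e.g. "rgba8"


@dataclass
class CameraOutputs:
    render_output_connected: bool                  # anything linked to Render Output?
    render_texture: Optional[TextureSpec] = None   # Render Texture node on the input socket


def resolve_target(cam: CameraOutputs, display: TextureSpec) -> Optional[TextureSpec]:
    """Return the texture the camera renders into, or None for the display."""
    if not cam.render_output_connected:
        # No Render Output link: render to the display.
        # Assumption: a Render Texture node alone does not change this.
        return None
    # Linked: render offscreen, using the Render Texture node's settings if
    # present, otherwise matching the display's resolution and format.
    return cam.render_texture if cam.render_texture is not None else display


display = TextureSpec(1920, 1080, "rgba8")
print(resolve_target(CameraOutputs(False), display))  # None
print(resolve_target(CameraOutputs(True), display))   # TextureSpec(width=1920, height=1080, format='rgba8')
```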